Creating an email version of ChatGPT using OpenAI

Before we begin, I would like to say that this was not written by an AI 🙂

There have been so many posts about ChatGPT on my social media feeds: guides on how to use it, debates on copyright, the reliability of its information, its use in education, and more.

We decided to experiment with it from an integration perspective and see if we could create something unique.

Enter EmailGPT!

With a little bit of prompt engineering and a lot of email parsing code, we were able to create a simple email-responding bot that uses OpenAI’s API to reply to the emails you send it.

A few challenges

We have a few challenges on our hands:

Figure out a way to receive emails in a place where we can process them

Figure out a way to parse email contents with code

Some solutions

OpenAI API

The documentation on how to integrate is clear and easy to understand, and OpenAI also provides client libraries in popular languages to get you started quickly.

Code

We chose Golang to parse emails and handle the interaction with OpenAI. It’s just personal preference; pick whatever you are comfortable with.

Prompt Engineering

Since the responses are sent by email, we need to tell the AI to behave as if it is responding to an email, which makes interacting with it feel more immersive. Here is an article explaining the concept.

In fact, most articles on how to use ChatGPT are largely about prompt engineering for specific domains. If you don’t tell it how to answer, it will pick whatever style it considers best, which can leave you feeling that it isn’t giving you the answers you want.

Processing the emails

Since this is just an experiment and we will only send test emails once in a while, a serverless setup is ideal. AWS provides a service called Lambda, which runs your code on demand and bills you only for the compute time it actually uses. This means lower costs, at the price of slightly slower responses when a function hasn’t run recently and needs to warm up (a “cold start”). The trade-off in latency is not a big issue in our case, since we are dealing with emails and people don’t expect them to be real-time.

Receiving and sending emails

AWS Lambda can subscribe to an AWS SNS (Simple Notification Service) topic, and AWS SES (Simple Email Service) can publish incoming emails to that topic. Together, they form a nice little pipeline that delivers emails to our cloud function.

SES can also send email, which makes replying even easier.

Implementation

Step 1: Setting up an account on OpenAI

In order to start using the OpenAI API, you need an account. Create one, enter your card details and generate an API key to get started. You pay as you go, based on your usage of the APIs.

Step 2: Setting up our Go project

We set up a Go project and a repository for it on GitHub.

You then need to follow the AWS guide on setting up a working request handler. A few gotchas:

Make sure that the handler name configured for your Lambda function matches the name of your compiled executable

When compiling your Go executable, especially on Apple Silicon Macs, prefix your build command with these environment variables: “GOOS=linux GOARCH=amd64 CGO_ENABLED=0” (AWS Lambda does not support ARM for Go at this point in time)

Make sure that your executable has execute permissions: “chmod 755 ./handleRequest”

Make sure that you are compiling your executable from your “main” package and that it contains a “main” function, which then hands your handler to the Lambda runtime (with the aws-lambda-go library, via “lambda.Start”).

I would say that Golang is a bit trickier to set up than Python or NodeJS on Lambda.

We set up a function that could take a text prompt, send it to OpenAI and email the response.
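The post doesn't show this function, so here is a minimal sketch of its shape. Completer and Mailer are hypothetical interfaces standing in for the OpenAI and SES clients, and handlePrompt is an illustrative name. Hiding the clients behind interfaces lets the flow run, and be tested, without network access or AWS credentials.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// Completer is a hypothetical stand-in for an OpenAI client.
type Completer interface {
	Complete(prompt string) (string, error)
}

// Mailer is a hypothetical stand-in for an SES client.
type Mailer interface {
	Send(to, subject, body string) error
}

// handlePrompt sends the prompt to the model and emails the answer back to the sender.
func handlePrompt(c Completer, m Mailer, sender, subject, prompt string) error {
	if strings.TrimSpace(prompt) == "" {
		return errors.New("empty prompt")
	}
	answer, err := c.Complete(prompt)
	if err != nil {
		return fmt.Errorf("completion failed: %w", err)
	}
	if err := m.Send(sender, "Re: "+subject, answer); err != nil {
		return fmt.Errorf("sending reply failed: %w", err)
	}
	return nil
}

// echoCompleter and recordingMailer are fakes for running the flow offline.
type echoCompleter struct{}

func (echoCompleter) Complete(prompt string) (string, error) { return "You said: " + prompt, nil }

type recordingMailer struct{ to, subject, body string }

func (r *recordingMailer) Send(to, subject, body string) error {
	r.to, r.subject, r.body = to, subject, body
	return nil
}

func main() {
	m := &recordingMailer{}
	if err := handlePrompt(echoCompleter{}, m, "alice@example.com", "Question", "What is Go?"); err != nil {
		panic(err)
	}
	fmt.Println(m.to, "|", m.subject, "|", m.body)
}
```

In production, the real OpenAI and SES clients would implement the same two methods.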

Step 3: Parsing an email body

After you have your function set up and ready to accept SNS events, you arrive at a crucial step: parsing the data received from an email.

I discovered that email content can look pretty daunting.

Luckily, there is always a helpful post somewhere explaining how to go about such a thing. Email bodies can contain multiple parts, separated by boundaries and each with its own content type. What we are interested in are the plain-text parts; for the sake of time, I settled on focusing on “text/plain”.

Through some testing with various email clients, we settled on a recursive function that parses the email headers and descends into nested parts until it has found every “text/plain” content block.

Reading the raw message through a Reader lets you retrieve all of its headers, including the Content-Type that tells you whether the body is multipart.

Multipart bodies have boundaries that delimit sections, and each section can itself contain another multipart body.

Once we have identified the content within these boundaries, the function calls itself with the next chunk of content, repeating the process until there is nothing left to process.

Keeping only the plain text gives our function a higher success rate by removing things that could make the API call fail or confuse the AI. This helps with:

Staying within the limits of a request to OpenAI. There is a maximum number of tokens that can be processed in a single request.

Providing content that is relevant to the request. Email signatures with images, attachments and quoted replies can feed invalid or irrelevant data to the AI.
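The post doesn't describe how signatures and replies are removed. One hypothetical approach is a few line-based heuristics plus a length cap, sketched below with an illustrative cleanBody function. A common rule of thumb for English text is roughly four characters per token, so a character cap only approximates a token limit; the 8,000-character value in main is an arbitrary assumption.

```go
package main

import (
	"fmt"
	"strings"
)

// cleanBody applies simple, hypothetical heuristics to a plain-text email body:
// it stops at a signature delimiter ("-- ") or a reply header ("On ... wrote:"),
// drops quoted lines starting with ">", and caps the result at maxRunes characters.
func cleanBody(body string, maxRunes int) string {
	var kept []string
	for _, line := range strings.Split(strings.ReplaceAll(body, "\r\n", "\n"), "\n") {
		trimmed := strings.TrimSpace(line)
		if line == "-- " || line == "--" {
			break // conventional signature separator
		}
		if strings.HasPrefix(trimmed, "On ") && strings.HasSuffix(trimmed, "wrote:") {
			break // start of a quoted earlier message
		}
		if strings.HasPrefix(trimmed, ">") {
			continue // quoted line
		}
		kept = append(kept, line)
	}
	out := strings.TrimSpace(strings.Join(kept, "\n"))
	if r := []rune(out); len(r) > maxRunes {
		out = string(r[:maxRunes]) // cut on a character boundary, not mid-UTF-8
	}
	return out
}

func main() {
	body := "Can you summarize this for me?\r\n\r\n-- \r\nAlice\r\nSent from my phone"
	fmt.Printf("%q\n", cleanBody(body, 8000))
}
```

Heuristics like these will miss some client-specific reply formats, so they are best treated as a starting point refined through testing.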

Step 4: Make it seem like the AI is responding to an email

The API just takes a prompt and responds; it has no idea of the context in which it is responding. We gave it some extra context and some details about its identity.

In these two sentences, there are a few instructions to guide the response:

“...as if you are responding to an email…”: This will help it write a greeting and sign off.

“...in a casual tone.”: We can control how formal it sounds.

“Your name is EmailGPT.”: This lets it know what name it should put at the end of the response.

We append the user’s input after these sentences so that the AI responds in the intended way.

There is more we could do here in terms of customization like adding combinations of tone, context and identity to modify how it behaves. We could also allow users to customize these settings to make the bot behave in the way that they prefer.

Step 5: Hosting

Due to the sporadic nature of what we are building (requests can arrive at random intervals), we went for a relatively simple serverless setup. This way, we only pay when emails are being sent and processed. Users won’t really notice the warm-up time of an AWS Lambda function, since nobody expects email responses to be immediate.

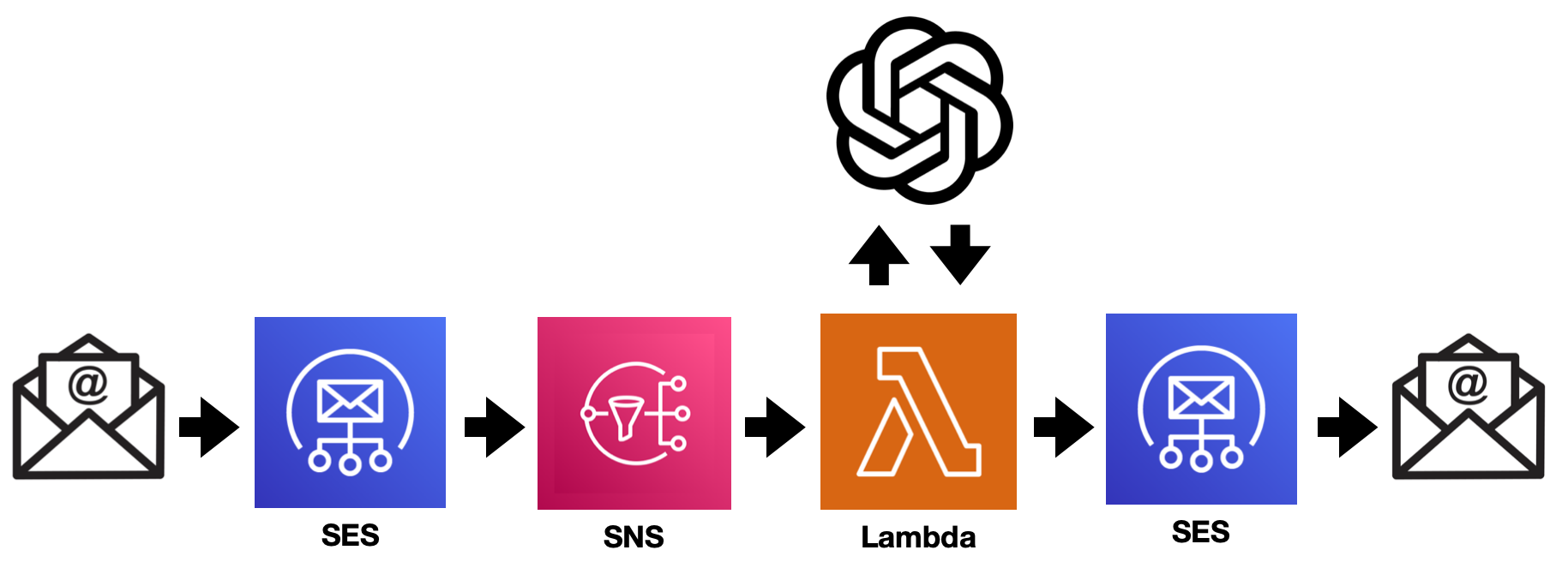

A brief explanation of the setup:

Emails are sent to ai@email-gpt.com

AWS SES receives emails from ai@email-gpt.com and publishes them to an SNS topic

When something is published to our SNS topic, it notifies AWS Lambda

AWS Lambda parses the data forwarded from SNS

A request from AWS Lambda is sent to the OpenAI API

OpenAI returns its response to our AWS Lambda function

AWS Lambda then uses AWS SES to send an email back to the email address which sent the email in the first place

Step 6: CI/CD with GitHub Actions

Since our code is on GitHub, we set up a small action that runs our test cases, compiles the code and updates our AWS Lambda function.

It usually takes a bit of time to set things up, especially if you’re doing it for the first time, but the time you save in the end is worth it.

You then start seeing your actions run on their own when you push code. It’s a great feeling, highly recommended!

Final thoughts

The hardest part of our implementation ended up being parsing emails in a reliable way so that OpenAI could make sense of our users’ requests. The APIs and various systems connecting everything together were easy to set up!

It’s insanely easy to start using ChatGPT: just open the site and sign up with an existing email account. Sending an email is even easier.

I find it interesting that, to research this article and build EmailGPT, I did not use an AI. I felt more comfortable searching the web and deciding for myself what information I needed. One measure of ChatGPT’s success could be whether someone like me feels comfortable enough to do this kind of research by asking an AI instead.

There is a limitation with our implementation: the context of your request is limited to the email you send. You cannot carry on a conversation the way you can with ChatGPT, which means you would have to provide context in your request and potentially summarize that context as the exchange grows. Here is an article which analyzes the effects of providing context to requests.

How we can help

This is just one example of an integration with OpenAI. It could instead be adapted into a little AI chat widget on your website or in your app.

If you would like some help with that, feel free to contact us!